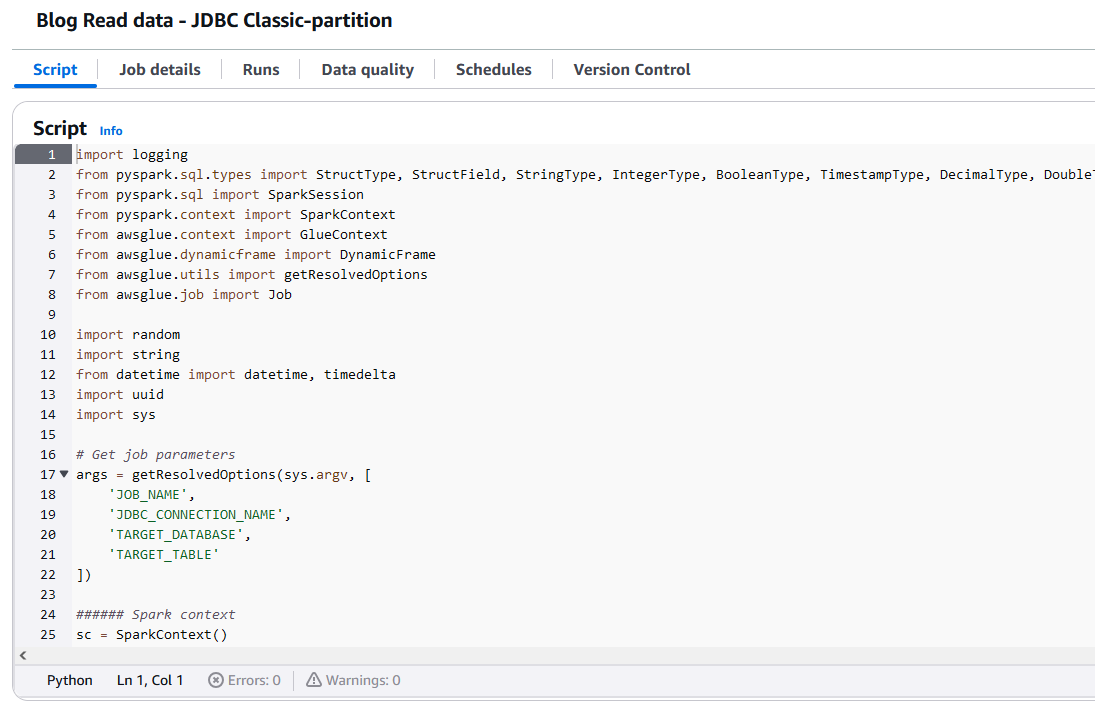

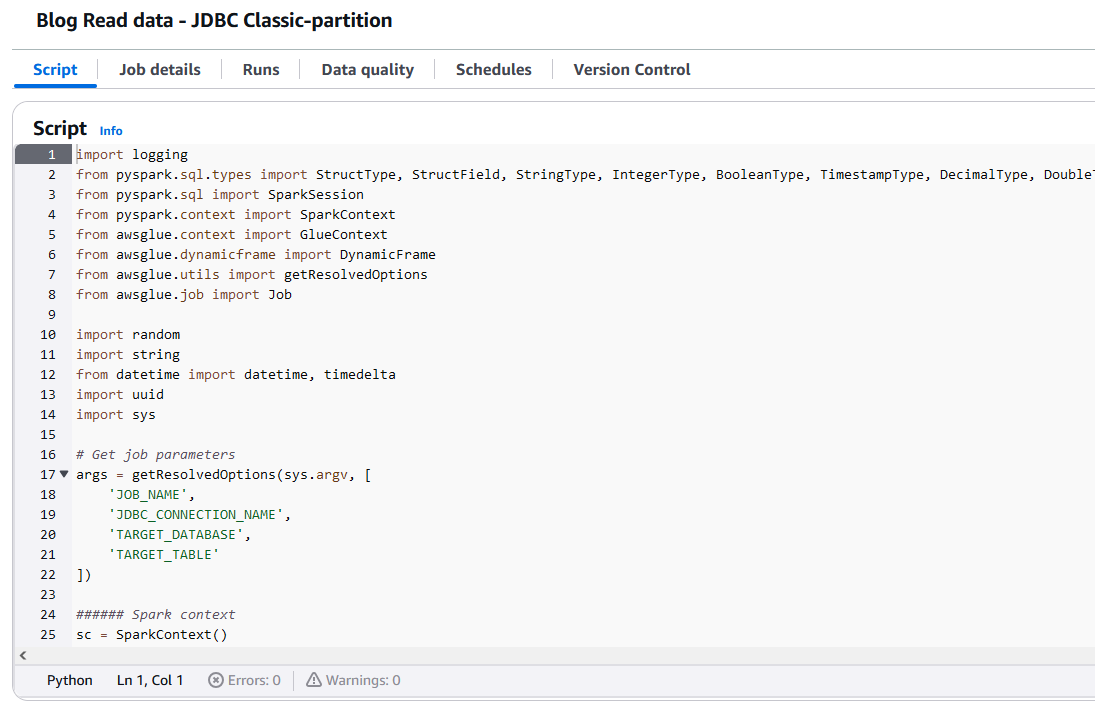

Performance optimization for AWS Glue using Microsoft SQL Server Spark connector

Learn how to optimize AWS Glue ETL jobs using the Microsoft SQL Server Spark connector for improved performance and authentication capabilities

A new online Quarkus training course is available on the byoskill.com website.

Personally, in 2016 I was tempted to join the FN party to protect my country. I also worked for many ministries, and I saw …

Recently I had the following issue with PostgreSQL (10+):

SQL exception: …

This article is taken from a discussion I had at the JUGL in Lausanne. It describes how to enable the JPA/SQL …

Since Bintray shut down in 2021, numerous OSS projects have been hampered by the lack of availability from Maven …

It is time to learn new frameworks to improve your code quality and shorten your testing phase. I have …