In this short story, I relate how I migrated my blog (my personal website) from a classic VM instance to containers, using Docker, Nginx, Kubernetes, and Google Cloud Container Engine.

One of my personal goals was also to have a cloud-deployed website without spending any money.

Motivations

Long story short, I have been using Docker on several projects for a year, and I progressively got used to the ease of deployment it provides. The issue? When I launched my blog (in February 2017), for time and cost reasons, I picked a VPS instance from OVH.

Why OVH? Clearly, it is one of the cheapest IaaS providers, and it is quite popular in France. I have been using it for several projects without any major issues.

OVH also has a public cloud offer, OVH Public Cloud. However, at that time the offer looked immature, both in its documentation and in its reviews. The second reason I rejected it is cloud adoption: many experts are turning toward GCloud and AWS, so spending my efforts on OVH would not give me enough visibility in my job in the short term.

To better help my colleagues and customers adopt the cloud, I decided to eat my own dog food, and among my personal projects, I chose to migrate my blog first.

That meant switching my blog from OVH to Google Cloud (Container Engine).

Pricing

Here are some interesting articles about the pricing and features of the major cloud providers:

Technical situation

My blog was hosted on a VPS (a shared instance on OVH), on which I had installed Apache 2, some monitoring and security tools, and Let’s Encrypt to obtain a free SSL certificate.

Hexo command line

My blog does not use classic WordPress: I am quite fond of static website generators and, more recently, of flat-file/headless CMSs.

I use HexoJS as a CMS. Its principle: you write your articles in Markdown, and the blog has to be regenerated to produce the static files, which yields well-optimized pages.
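Regenerating the site is a job for the Hexo command line. A minimal sketch, assuming `hexo-cli` is installed and Hexo’s default `public/` output folder (the folder the Dockerfile below copies into the image); `regenerate_site` is a hypothetical helper name:

```shell
# Hypothetical helper: rebuild the static site from the Markdown sources.
# Assumes hexo-cli is installed and Hexo's default output folder, public/.
regenerate_site() {
  # "hexo clean" drops the cache and previously generated files,
  # "hexo generate" renders every article into static HTML under public/
  hexo clean && hexo generate || return 1
  # Fail loudly if nothing was produced
  if [ ! -f public/index.html ]; then
    echo "regenerate_site: public/index.html is missing" >&2
    return 1
  fi
}
```

From the blog’s root folder, run `regenerate_site`; `hexo server` can then preview the result on http://localhost:4000 before any image is built.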


How to switch from a legacy deployment to the cloud

Here is how I proceeded to migrate this website.

A) Create my Google Cloud Account

Yes, we have to start from the beginning: I created a new Google Cloud account. Although creating an account is rather easy, I was surprised: I could not pick an individual account.

It is even mentioned in the Google FAQ:

I’m located in Europe and would like to try out the Google Cloud Platform. Why can’t I select an Individual account when registering?

The reason (thanks to the EU...) is dumb as fuck: “In the European Union, Google Cloud Platform services can be used for business purposes only.”

For information, this restriction is lifted in Switzerland.

Interestingly enough, the Google Cloud free trial has been expanded to $300 for one year.

B) Discover Google Cloud

Well, the UI is easy to use, even with that nagging collapsible menu on the right side.

Google Cloud Console

The documentation is quite abundant, but I found three major issues:

- Lack of pictures and diagrams: most concepts are described only with words. Fortunately, some very kind people made great presentations (here and here).

- Copy/paste from the Kubernetes website: yeah, logically, most of the documentation is on the Kubernetes site.

- Missing information and use cases: for example, on how to use this damn Ingress. Why don’t people provide Gists? 🙂

I created a cluster with two VM instances, with 0.6 GB of RAM and 1 core each, because I wanted to play with the load-balancing features of Kubernetes.

Create a cluster
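For reference, a comparable cluster can also be requested from the gcloud CLI. A sketch under assumptions: the cluster name `blog` and zone `us-central1-a` reuse the values of the `get-credentials` command further down, and `f1-micro` is assumed as the machine type because it matches 0.6 GB of RAM and one (shared) core. GKE’s available machine types and node-count constraints change over time, so check them before running it; `create_blog_cluster` is a hypothetical name:

```shell
# Hypothetical helper: create a two-node GKE cluster.
# Defaults are assumptions: cluster "blog", zone "us-central1-a",
# machine type "f1-micro" (0.6 GB RAM, shared core).
create_blog_cluster() {
  cluster="${1:-blog}"
  zone="${2:-us-central1-a}"
  machine_type="${3:-f1-micro}"
  # Two nodes, so Kubernetes has somewhere to spread and balance replicas
  gcloud container clusters create "$cluster" \
    --zone "$zone" \
    --num-nodes 2 \
    --machine-type "$machine_type"
}
```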

C) Replicate my server configuration as a Docker container

The easiest and most fun part was reproducing my server configuration with Docker while evolving it: I wanted to switch from Apache 2 to Nginx.

For my first solution, I used a ready-made (and optimized) Nginx container image and modified my build script to generate the Docker image, so the generated website is baked directly into the image:

FROM bringnow/nginx-letsencrypt:latest
RUN mkdir -p /data/nginx/cache
COPY docker/nginx/nginx.conf /etc/nginx/nginx.conf
COPY docker/letsencrypt /etc/letsencrypt
COPY docker/nginx/dhparam /etc/nginx/dhparam
COPY public /etc/nginx/html

I made several tests using the docker run command to check the configuration on my own machine.

docker run --rm -i -t --name nginx -p 80:80 -p 443:443 us.gcr.io/sylvainleroy-blog/blog:latest

D) How to host my Docker image?

My second question was where to store my Docker images.

Should I run my own registry, or use a cloud registry?

I have used two different container registries in my tests.

The first one is Docker Hub.

Docker Hub

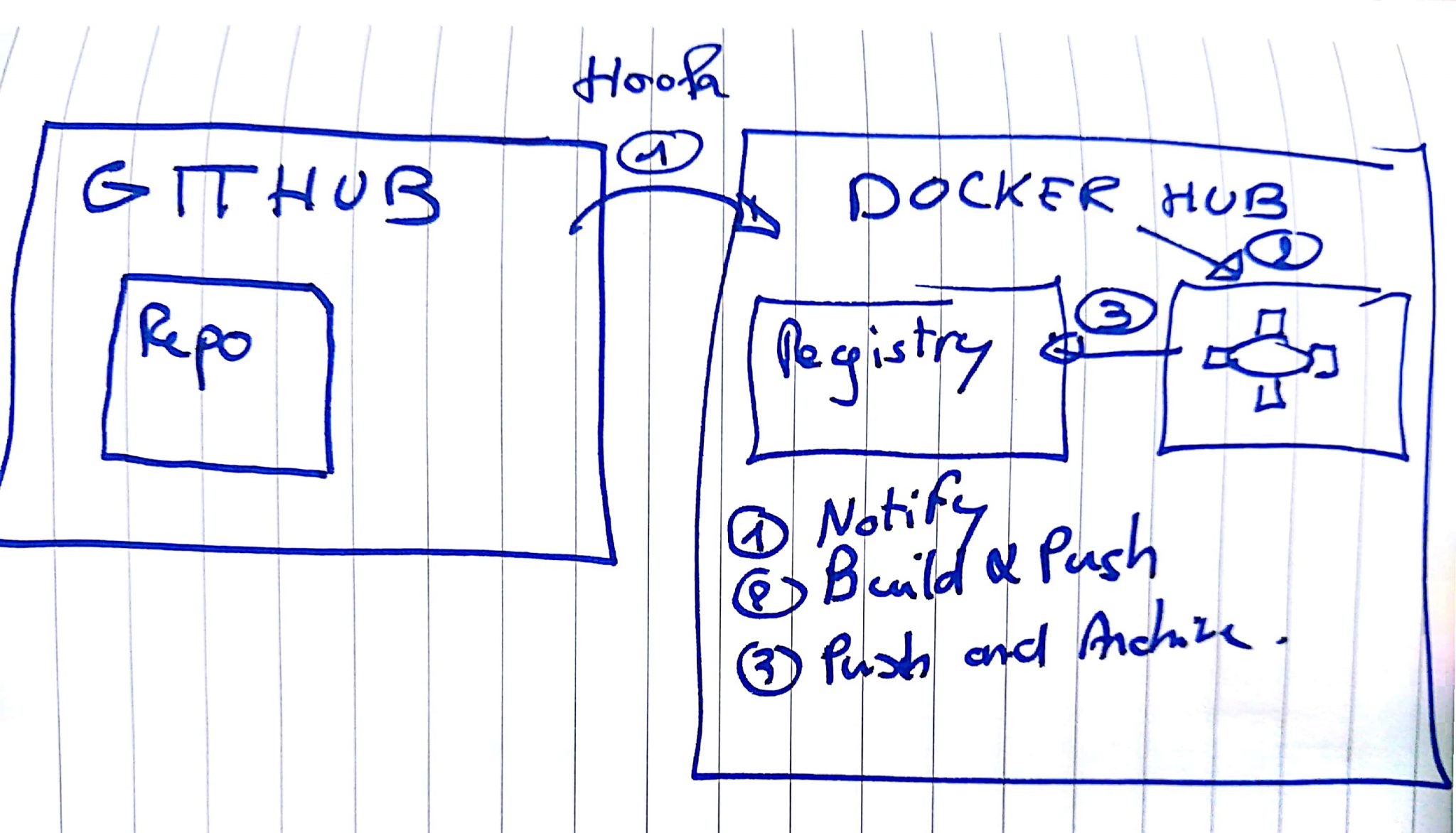

What I appreciate most about Docker Hub is that I can delegate building my Docker images to the Hub, triggered from GitHub. The mechanism is simple to enable and really convenient: each modification of my Dockerfile triggers a build that automatically creates my Docker image!

Here is a small drawing to explain it:

Docker Hub & Builds draw

And here is part of the configuration.

However, Google Cloud also offers a container registry, so using both was redundant. I kept Google’s registry to use it with CircleCI.

Therefore, for the time being, I store my Docker images on Google Cloud.

Google Cloud Container Registry

With this kind of command:

gcloud docker -- push us.gcr.io/sylvainleroy-blog/blog:0.1
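Note that `gcloud docker` has since been deprecated. A sketch of the current equivalent, assuming the same `us.gcr.io/sylvainleroy-blog/blog` image: register gcloud once as a Docker credential helper, then push with the plain Docker CLI. Both function names are hypothetical:

```shell
# Image path taken from the push command above; override with IMAGE=... if needed
IMAGE="${IMAGE:-us.gcr.io/sylvainleroy-blog/blog}"

# Hypothetical helper, one-time setup: registers gcloud as the credential
# helper for the gcr.io registries in ~/.docker/config.json
configure_registry_auth() {
  gcloud auth configure-docker
}

# Hypothetical helper: push an image that was already built with this tag
push_blog_image() {
  if [ -z "$1" ]; then
    echo "usage: push_blog_image <tag>" >&2
    return 1
  fi
  docker push "$IMAGE:$1"
}
```

Typical use: `configure_registry_auth` once per machine, then `push_blog_image 0.1`.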

E) The Cloud migration in itself

Maybe it is just my taste, but I only used the gcloud CLI to perform the operations.

Install Google SDK

Everything went smoothly, but don’t forget to install the Kubernetes CLI:

gcloud components install kubectl

I had a problem with the CLI: I could not see my new projects (only some of them), and I had to authenticate again:

gcloud auth login

This performs a new login, after which the update is visible.

Don’t forget to also add your cluster credentials, following the instructions behind the Connect button next to each cluster in the console:

Google Cluster

gcloud container clusters get-credentials --zone us-central1-a blog
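To avoid the missing-projects surprise and having to repeat `--zone`, the default project and zone can be pinned in the gcloud configuration. A sketch, assuming the project ID `sylvainleroy-blog` (taken from the registry path used earlier), the zone `us-central1-a` and the cluster `blog` (taken from the command above); `connect_to_cluster` is a hypothetical name:

```shell
PROJECT="${PROJECT:-sylvainleroy-blog}"
ZONE="${ZONE:-us-central1-a}"
CLUSTER="${CLUSTER:-blog}"

# Hypothetical helper: point gcloud and kubectl at the blog cluster
connect_to_cluster() {
  # List the projects visible to the current login
  gcloud projects list &&
    # Persist defaults so later commands no longer need --project/--zone
    gcloud config set project "$PROJECT" &&
    gcloud config set compute/zone "$ZONE" &&
    # Store the cluster endpoint and credentials in ~/.kube/config
    gcloud container clusters get-credentials "$CLUSTER" &&
    # Confirm which context kubectl now uses
    kubectl config current-context
}
```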

Understanding the concepts of Pod and Deployment

It took me a while to understand what a Deployment and a Pod are. Coming from Docker and docker-compose, I could not relate them to concepts I already knew.

That is one of my concerns with Kubernetes: some technical terms are poorly chosen and do not really help you understand what is behind them.

Well, I finally created a Deployment that runs two instances of my Docker container (replicas: 2), each in its own Pod. This deployment file basically declares that it requires my previous Docker image and that I want two copies. The selector and label mechanism is quite handy.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: blog-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
      tier: frontend
  template:
    metadata:
      labels:
        app: nginx
        role: master
        tier: frontend
    spec:
      containers:
        - name: nginx
          image: us.gcr.io/blog/blog:0.9
          ports:
            - containerPort: 80
              name: http
            - containerPort: 443
              name: https

I use this kind of command to create it:

kubectl create -f pod-blog.yml

kubectl Pod information
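One way to connect the Pod and Deployment vocabulary is to look at the objects the Deployment creates: the Deployment owns a ReplicaSet, and the ReplicaSet keeps the requested number of identical Pods running, each Pod holding one nginx container here. A sketch using the name and labels of the manifest above; `show_blog_status` is a hypothetical name:

```shell
# Hypothetical helper: show the Deployment -> ReplicaSet -> Pods chain
show_blog_status() {
  # The Deployment: desired vs. ready replicas
  kubectl get deployment blog-deployment &&
    # The ReplicaSet it created to keep that number of Pods alive
    kubectl get replicasets -l app=nginx &&
    # The Pods themselves; -o wide shows the node each one runs on
    kubectl get pods -l app=nginx -o wide
}
```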

Automating the generation, docker image building, and deployment

Using CircleCI, I have automated the full cycle: site generation, Docker image build, push to the container registry, and pod reload.

CircleCI Deployment Schema

And the good thing is that all these things are free.
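The CircleCI configuration itself is not reproduced here. As a rough sketch of the steps such a job could run on each commit, assuming `hexo`, `docker` and `kubectl` are available in the CI image, registry and cluster credentials are already configured, and the commit SHA is used as the image tag (CircleCI exposes it as `CIRCLE_SHA1`); `deploy_blog` is a hypothetical name:

```shell
IMAGE="${IMAGE:-us.gcr.io/sylvainleroy-blog/blog}"
DEPLOYMENT="${DEPLOYMENT:-blog-deployment}"
CONTAINER="${CONTAINER:-nginx}"

# Hypothetical helper: generate, build, push, and roll out one version
deploy_blog() {
  tag="${1:-${CIRCLE_SHA1:-}}"
  if [ -z "$tag" ]; then
    echo "usage: deploy_blog <tag> (or set CIRCLE_SHA1)" >&2
    return 1
  fi
  # 1. Regenerate the static site into public/
  hexo generate &&
    # 2. Bake public/ into a new Nginx image and push it to the registry
    docker build -t "$IMAGE:$tag" . &&
    docker push "$IMAGE:$tag" &&
    # 3. Point the Deployment at the new image; Kubernetes replaces the pods
    kubectl set image "deployment/$DEPLOYMENT" "$CONTAINER=$IMAGE:$tag" &&
    # 4. Wait for the rolling update; exits with an error if it fails
    kubectl rollout status "deployment/$DEPLOYMENT"
}
```

Using a unique tag per commit (rather than `latest`) is what makes `kubectl set image` see a change and start a rolling update.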

Feedback

After playing with it for two weeks in my spare time, here is my feedback:

Rolling Update

The deployment mechanism and the way rolling updates are performed are impressive and a real time-saver. Some banks still deploy their software manually or semi-automatically (with tools like Ansible), and their rolling updates are performed awkwardly. Here, Kubernetes deploys the new version in the background, checks its state (roughly), and, if the conditions are met, switches from the old version to the new one. I use this mechanism to try out my new Docker images and push the new versions.
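The rolling behaviour can be tuned and watched from kubectl. A sketch, assuming the `blog-deployment` name used above; the `maxSurge`/`maxUnavailable` values and the 120-second timeout are arbitrary choices, and both function names are hypothetical:

```shell
DEPLOYMENT="${DEPLOYMENT:-blog-deployment}"

# Hypothetical helper: allow one extra pod during an update and never
# drop below the requested number of ready pods
set_rolling_strategy() {
  kubectl patch "deployment/$DEPLOYMENT" -p \
    '{"spec":{"strategy":{"type":"RollingUpdate","rollingUpdate":{"maxSurge":1,"maxUnavailable":0}}}}'
}

# Hypothetical helper: wait for the current rollout; if it does not
# complete in time, return to the previous revision
watch_or_rollback() {
  if kubectl rollout status "deployment/$DEPLOYMENT" --timeout=120s; then
    return 0
  fi
  kubectl rollout undo "deployment/$DEPLOYMENT"
  return 1
}
```

With `maxUnavailable: 0`, an old pod is only removed once a new one is ready, so the site keeps serving during the switch; `kubectl rollout history deployment/blog-deployment` lists the revisions that `undo` can return to.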

Load Balancing mess

I struggled a lot to set up my load balancer. Well, not at the beginning: Kubernetes and GCloud describe precisely how to set up a Level-4 LoadBalancer, it takes a few lines of YAML, and it worked fine. However, I had huge difficulties when I decided to switch to TLS and serve HTTPS with Let’s Encrypt.
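For reference, the Level-4 setup is a Service of type `LoadBalancer`. In short: `ClusterIP` (the default) is a virtual IP reachable only inside the cluster, `NodePort` additionally opens the same port on every node, and `LoadBalancer` additionally asks the cloud provider for an external load balancer that forwards to those nodes. A sketch, assuming a hypothetical Service named `blog-service` and reusing the labels and named ports (`http`, `https`) of the Deployment above; `blog_service_manifest` is a hypothetical name:

```shell
# Hypothetical helper: print a Service manifest; the type defaults to
# LoadBalancer but ClusterIP or NodePort can be passed to compare them
blog_service_manifest() {
  service_type="${1:-LoadBalancer}"
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: blog-service
spec:
  type: $service_type
  selector:
    app: nginx
    tier: frontend
  ports:
    - name: http
      port: 80
      targetPort: http
    - name: https
      port: 443
      targetPort: https
EOF
}
```

Usage: `blog_service_manifest | kubectl apply -f -`, then `kubectl get service blog-service` shows the external IP once GCloud has provisioned the load balancer.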

I met several difficulties:

- How do I obtain my SSL certificate for a Docker container that is not deployed yet?

- What the fuck is a NodePort? What is the difference between a ClusterIP, a LoadBalancer, and an Ingress?

NodePork

- Where should I store my certificate: in the GCloud configuration or in my NGINX?

- Why is Ingress not working with multiple routes?

To address these issues, I found the following temporary solutions:

- I use Certbot/Let’s Encrypt with DNS validation. That way, I can generate my certificates “offline”.

- I am still not sure what exactly a NodePort is, nor whether I need a LoadBalancer for a single container in my pod or can simply open the firewall. These concepts introduced by Kubernetes are still obscure to me, even after several reads.

- I decided to implement HTTPS load balancing by storing the certificate in my NGINX configuration and relying on a Level-4 LoadBalancer to dispatch the traffic.

- I tried really hard to make Ingress (the Level-7 LB) work, but even the examples failed for me (an “impossible to map the port number 0” error), and it is really badly documented.
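A sketch of that setup, with two hypothetical helpers: one requesting a certificate through Certbot’s manual DNS challenge (Certbot asks you to publish a TXT record under `_acme-challenge.<domain>`), and one printing an NGINX server block that terminates TLS with the resulting files. Paths assume Certbot’s default `/etc/letsencrypt/live/<domain>/` layout (the folder the Dockerfile copies into the image) and that `docker/nginx/dhparam` is a single file:

```shell
# Hypothetical helper: obtain a certificate "offline" via a DNS challenge
issue_certificate() {
  certbot certonly --manual --preferred-challenges dns -d "$1"
}

# Hypothetical helper: print an HTTPS server block for the given domain,
# plus a plain-HTTP block that redirects to HTTPS
tls_server_block() {
  domain="$1"
  cat <<EOF
server {
    listen 443 ssl;
    server_name $domain;
    ssl_certificate /etc/letsencrypt/live/$domain/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/$domain/privkey.pem;
    ssl_dhparam /etc/nginx/dhparam;
    root /etc/nginx/html;
}

server {
    listen 80;
    server_name $domain;
    return 301 https://\$host\$request_uri;
}
EOF
}
```

Let’s Encrypt certificates are valid for 90 days, and without an authentication hook script, renewing a `--manual` certificate means repeating the DNS step.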

Persistent volume

The documentation about persistent volumes is imprecise in both Kubernetes and GCloud, and there are important differences between the Kubernetes implementation and Google’s, and even between versions.

You have several options:

- Use a PersistentVolume and a PersistentVolumeClaim, and attach them to your containers

- Declare a volume directly in your deployment file
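The first option can be sketched as follows, assuming GKE’s default StorageClass (which provisions a Compute Engine persistent disk on demand), a hypothetical claim named `blog-data`, a 1Gi size, and, purely for illustration, the `/data/nginx/cache` directory created in the Dockerfile as the mount path. Both function names are hypothetical:

```shell
# Hypothetical helper: print a PersistentVolumeClaim for a 1Gi disk
blog_pvc_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: blog-data
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF
}

# Hypothetical helper: fragment to merge into the Deployment's pod
# template (spec.template.spec) so the nginx container mounts the claim
blog_volume_fragment() {
  cat <<'EOF'
volumes:
  - name: blog-data
    persistentVolumeClaim:
      claimName: blog-data
containers:
  - name: nginx
    volumeMounts:
      - name: blog-data
        mountPath: /data/nginx/cache
EOF
}
```

One caveat: a `ReadWriteOnce` persistent disk can be attached read-write to a single node only, so with two replicas spread over two nodes, only one pod can mount the claim; a StatefulSet with `volumeClaimTemplates` gives each pod its own disk instead.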

Another issue I ran into: my Docker container (and the pod itself) kept failing because the persistent volume that was created was never formatted.

But why ????

Indeed, your deployment file has properties to set the required filesystem format, but no formatting is ever performed.

This led to the following questions:

- How to mount something unformatted?

- How to mount something unformatted in a container of the pod without using the deployment?

- Why is there so little documentation in Google Container Engine (in comparison with Google Compute Engine)?

The recommended solution is to create a VM instance BY HAND with Google Compute Engine, attach the disk to that instance, mount it manually, and trigger the formatting. WTF.

If you have a better way to handle the issue, I am really interested!

Conclusion

After a month in production, I haven’t spent a buck. My page response time decreased from 3.4 s to 2.56 s, and I no longer wake up at night, eyes full of horror, thinking about how to reinstall the site: I only have a container to push.

I am not using the Kubernetes UI yet, and I don’t see the need for it: the CLI offers almost everything.

Cleaning up a cluster, its pods, and its deployments requires several steps, which could perhaps be simplified.
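Grouped into a single function, the cleanup could look like this. A sketch, assuming the `pod-blog.yml` manifest and the `blog` cluster in `us-central1-a` used above, plus a hypothetical `blog-service` Service; `teardown_blog` is a hypothetical name, and `--ignore-not-found` lets it be re-run safely:

```shell
# Hypothetical helper: remove the blog workloads, then the cluster itself
teardown_blog() {
  # Remove the Deployment (and, through it, its ReplicaSet and Pods)
  kubectl delete -f pod-blog.yml --ignore-not-found &&
    # Remove the Service; for a LoadBalancer this releases the cloud load balancer
    kubectl delete service blog-service --ignore-not-found &&
    # Delete the cluster and its node VMs; --quiet skips the confirmation prompt
    gcloud container clusters delete blog --zone us-central1-a --quiet
}
```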

Pricing

Another very important goal of this project was to reduce the hosting bill.

Currently, here is my bill for 1,600 visits per month:

- I have a private GitHub repository (~$7/month)

- I use the free tier of CircleCI, which supports a private GitHub repository and a large number of builds

- Docker Hub is free for any number of public repositories and one private repository.

- I use Google’s free tier: I spent $1 in one month, and that bill is shared between my blog and my other projects.

- My blog runs on a cluster of 2 VMs

This compares with the 79€/year I paid for my VPS.

Interesting links

- https://cloud.google.com/container-engine/docs/tutorials/guestbook

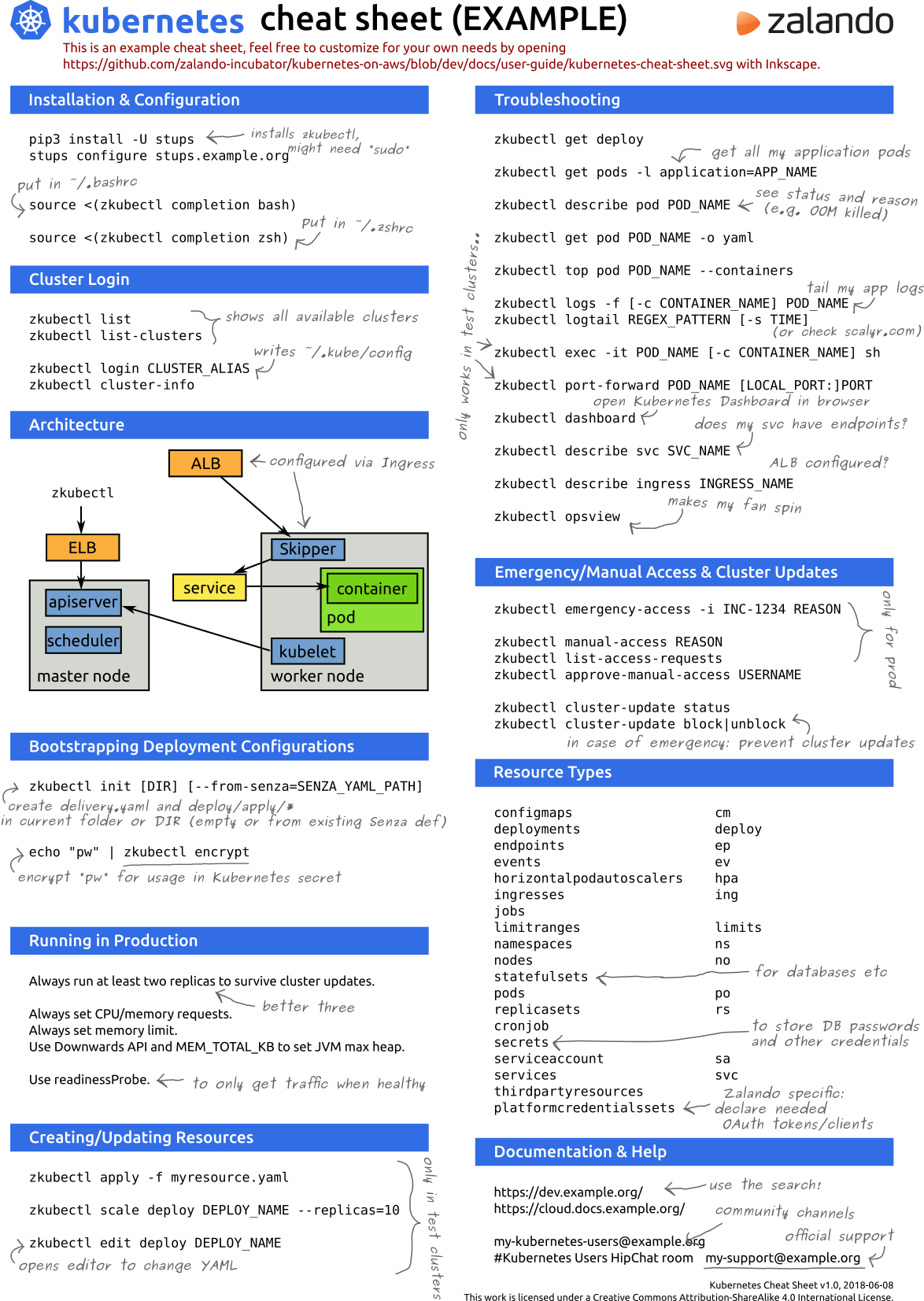

- Cheatsheet

- Cheatsheet