In this article, I present how to write custom Cobol rules with SonarQube, along with some caveats I encountered. The target audience should have some basic compiler knowledge (AST, lexical analysis, syntactic analysis).

Writing custom Cobol Rules with SonarQube

Some words about SonarQube and Cobol

From Wikipedia: SonarQube (formerly Sonar)[1] is an open source platform developed by SonarSource for continuous inspection of code quality to perform automatic reviews with static analysis of code to detect bugs, code smells, and security vulnerabilities on 20+ programming languages. SonarQube offers reports on duplicated code, coding standards, unit tests, code coverage, code complexity, comments, bugs, and security vulnerabilities.[2][3]

We are interested in the commercial feature that allows us to scan Cobol code and detect code quality defects.

How to start a project to write custom rules

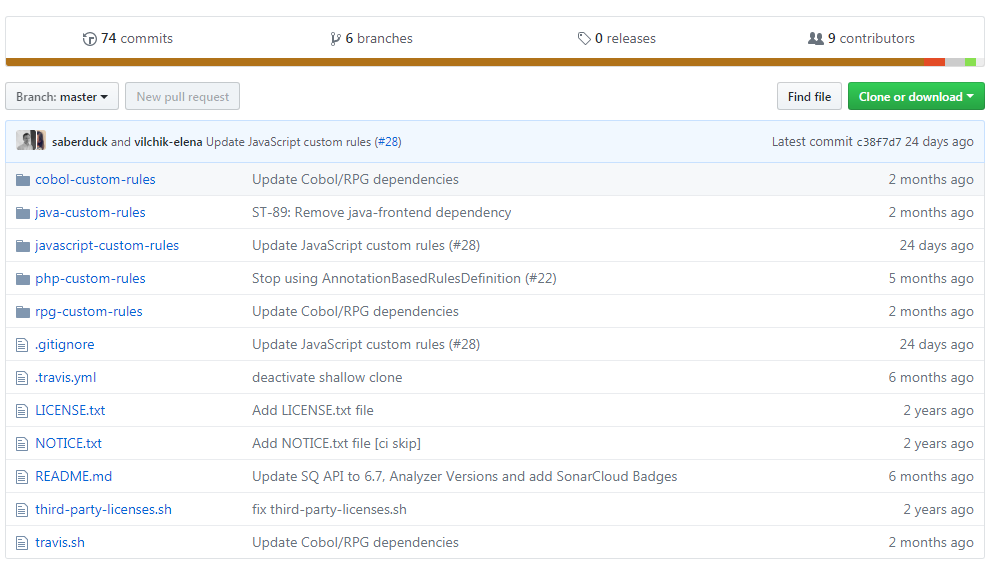

To start a new project, you need to clone the bootstrap project provided by SonarSource here.

Once you have cloned the project, you should have a structure similar to this one:

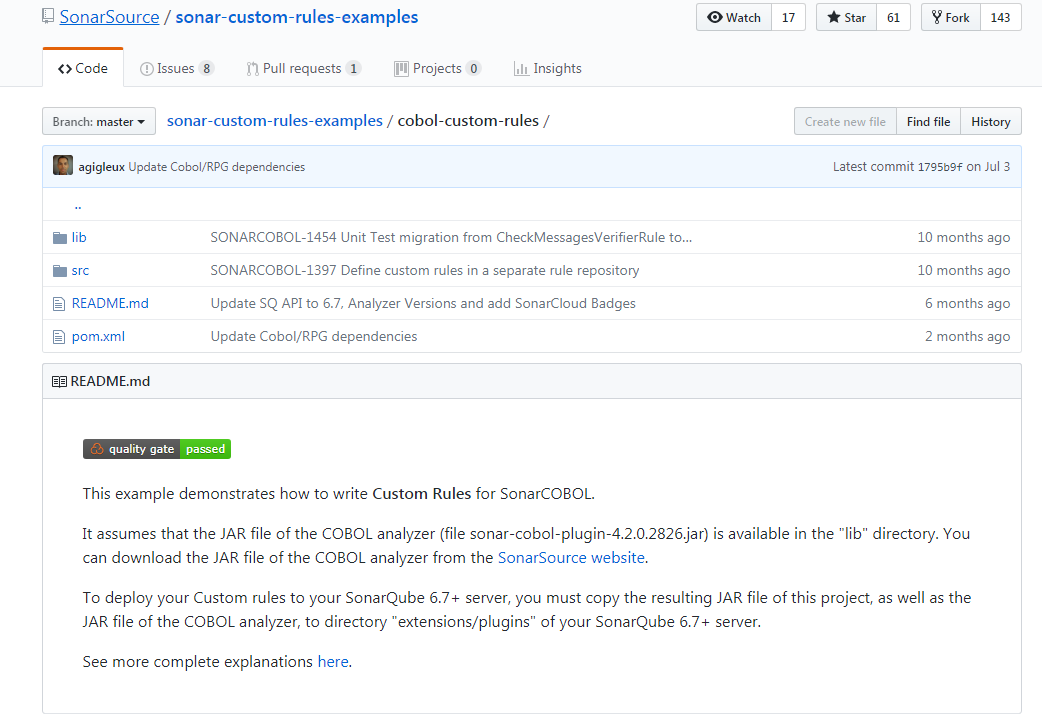

Only the cobol-custom-rules folder is of interest to us.

Download the Cobol Parser

The third step is to download the Cobol plugin library into the lib folder.

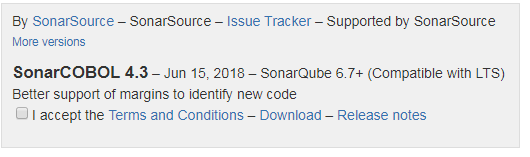

The download link is here:

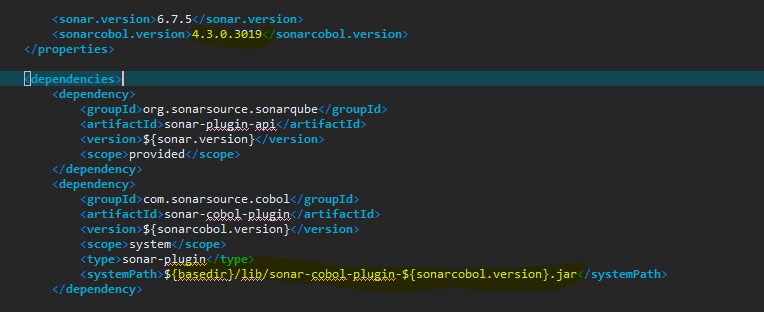

Then open the pom.xml file and edit the following lines to match these:

Description of the project layout

- CobolCustomRulesPlugin.java: the main class of the plugin. It defines all the properties and features provided by the plugin; in our context, it essentially declares the list of rule repositories (the catalog).

- CobolCustomCheckRepository.java: describes the list of rules contained in this example repository. The rules themselves are stored in the check subpackage.

- ForbiddenCallRule.java, IssueOnEachFileRule.java, TrivialEvaluateRule.java: three examples of custom Cobol rules.

- ForbiddenCallRuleTest.java, IssueOnEachFileRuleTest.java, TrivialEvaluateRuleTest.java: the unit tests for these custom rules.

How to write a rule

In my view (which may differ from the SonarSource/SonarQube developers' view), SonarQube provides two kinds of rules:

- Basic / syntactic rules, where you query tokens and the AST to match your patterns

- Semantic rules, which let you inspect the symbol table built by the analyzer and the approximated data types

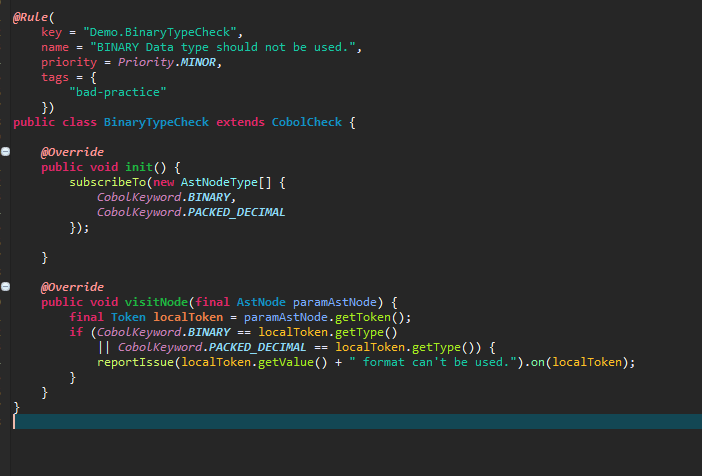

Cobol rules usually inherit from the com.sonarsource.cobol.api.ast.CobolCheck class.

If you need access to the symbol table or to data types, make your rule inherit from com.sonarsource.cobol.checks.SymbolTableBasedCheck.

How does it work?

Basically, each rule performs a tree traversal to collect the nodes of interest. Each node of interest is passed to the rule through a Visitor pattern, via methods such as visitNode and visitFile.

The init part contains the main filter: it restricts the nodes handed to your rule to the types you select.

In my example, I filter the AST down to token nodes, more specifically the BINARY and PACKED_DECIMAL tokens.
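To make the filtering concrete, here is a self-contained toy model rather than the SonarSource API: a hypothetical `ToyNode` tree, a `UsageCheck` whose `init()` subscribes to the BINARY and PACKED_DECIMAL types, and a walker that hands only those nodes to `visitNode()`. All names are assumptions chosen for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.EnumSet;
import java.util.List;
import java.util.Set;

// Hypothetical node types; the real grammar defines its own token and statement types.
enum ToyNodeType { PROGRAM, DATA_DESCRIPTION, PICTURE, BINARY, PACKED_DECIMAL, DISPLAY_STATEMENT }

// Minimal stand-in for an AST node: a type, the source text it covers and its children.
class ToyNode {
  final ToyNodeType type;
  final String text;
  final List<ToyNode> children;

  ToyNode(ToyNodeType type, String text, ToyNode... children) {
    this.type = type;
    this.text = text;
    this.children = Arrays.asList(children);
  }
}

// Toy check: init() declares the node types of interest, visitNode() only receives those.
class UsageCheck {
  final Set<ToyNodeType> subscribed = EnumSet.noneOf(ToyNodeType.class);
  final List<String> visited = new ArrayList<>();

  void init() {
    subscribed.add(ToyNodeType.BINARY);
    subscribed.add(ToyNodeType.PACKED_DECIMAL);
  }

  void visitNode(ToyNode node) {
    visited.add(node.type + ":" + node.text);
  }
}

// Depth-first, pre-order walk that passes a node to the check only if its type is subscribed.
class SubscriptionWalker {
  static void walk(ToyNode node, UsageCheck check) {
    if (check.subscribed.contains(node.type)) {
      check.visitNode(node);
    }
    for (ToyNode child : node.children) {
      walk(child, check);
    }
  }
}
```

Building a small tree with two data descriptions and a DISPLAY statement, then calling `check.init()` and `SubscriptionWalker.walk(tree, check)`, leaves only the BINARY and PACKED-DECIMAL nodes in `check.visited`; the PICTURE and DISPLAY nodes never reach the rule.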

The most useful methods

The getToken() and getTokens() methods of the AstNode class provide the basic toolkit to read the code statement by statement and detect simple patterns.

As its name suggests, getType() returns the node's type. Since there are two kinds of nodes (statements and tokens), you can compare the type against a specific token or statement type.

Using getType(), you can search for IF or PERFORM statements, or for specific data types such as BINARY, which are represented by a single token.
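As an illustration of combining a type test with a token scan, here is a toy version of an "IF without END-IF" check. `Statement`, its `getType()`/`getTokens()` methods and the `IF_STATEMENT` type name are hypothetical stand-ins, not AstNode. The sketch assumes that a statement's token list also covers its nested statements, which is why it counts IF and END-IF keywords instead of simply looking for an END-IF.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical statement node: a type name, the line of its first token and its token values.
class Statement {
  private final String type;
  private final int line;
  private final List<String> tokens;

  Statement(String type, int line, String... tokens) {
    this.type = type;
    this.line = line;
    this.tokens = Arrays.asList(tokens);
  }

  String getType() { return type; }
  int getLine() { return line; }
  List<String> getTokens() { return tokens; }
}

class IfWithoutEndSketch {
  // Hypothetical type name; the real grammar uses its own statement keys.
  static final String IF_STATEMENT = "IF_STATEMENT";

  // Each END-IF closes the innermost open IF, so the statement is explicitly
  // closed only if its tokens hold as many END-IF as IF keywords.
  static boolean isClosed(Statement s) {
    int ifs = 0;
    int endIfs = 0;
    for (String token : s.getTokens()) {
      if (token.equalsIgnoreCase("IF")) {
        ifs++;
      } else if (token.equalsIgnoreCase("END-IF")) {
        endIfs++;
      }
    }
    return ifs == endIfs;
  }

  // Lines of the IF statements that are not closed by an explicit END-IF.
  static List<Integer> findIfsWithoutEndIf(List<Statement> statements) {
    List<Integer> lines = new ArrayList<>();
    for (Statement s : statements) {
      if (s.getType().equals(IF_STATEMENT) && !isClosed(s)) {
        lines.add(s.getLine());
      }
    }
    return lines;
  }
}
```

A naive `getTokens().contains("END-IF")` would wrongly accept an outer IF whose only END-IF belongs to a nested IF; counting both keywords avoids that.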

To report an issue, call reportIssue(). The issue message can be localized using property files located in the src/main/resources folder.

AstNode also provides basic traversal functions (much like XPath axes) to obtain children, siblings, descendants and parents.

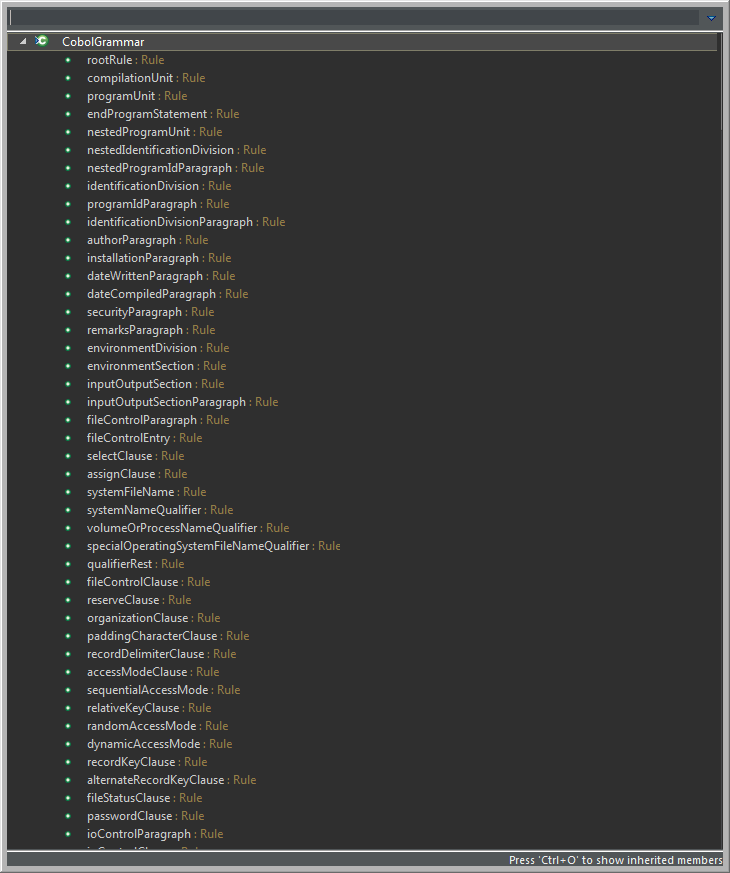
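The traversal axes can be illustrated with a self-contained toy tree. `TreeNode` and its methods are hypothetical names, not the AstNode API; they only show what "next sibling", "descendants of a type" and "closest ancestor of a type" mean on a nested IF.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical tree node exposing XPath-like axes.
class TreeNode {
  final String type;
  TreeNode parent;
  final List<TreeNode> children = new ArrayList<>();

  TreeNode(String type) {
    this.type = type;
  }

  // Appends a child, sets its parent pointer and returns this node for chaining.
  TreeNode add(TreeNode child) {
    child.parent = this;
    children.add(child);
    return this;
  }

  // The sibling that follows this node under the same parent, or null.
  TreeNode nextSibling() {
    if (parent == null) {
      return null;
    }
    int index = parent.children.indexOf(this);
    return index + 1 < parent.children.size() ? parent.children.get(index + 1) : null;
  }

  // All descendants of the given type, in depth-first pre-order.
  List<TreeNode> descendants(String wanted) {
    List<TreeNode> result = new ArrayList<>();
    for (TreeNode child : children) {
      if (child.type.equals(wanted)) {
        result.add(child);
      }
      result.addAll(child.descendants(wanted));
    }
    return result;
  }

  // The closest ancestor of the given type, or null.
  TreeNode firstAncestor(String wanted) {
    for (TreeNode p = parent; p != null; p = p.parent) {
      if (p.type.equals(wanted)) {
        return p;
      }
    }
    return null;
  }
}
```

For instance, `firstAncestor("IF")` on a nested IF returns the enclosing IF, which is the kind of question a rule about nesting depth needs to answer.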

How to get the list of supported instructions?

You have to go through an obfuscated field called “A”, which contains the list of instructions supported by the grammar.
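If you want to discover what the obfuscated grammar exposes, standard Java reflection can enumerate its members. The sketch below runs against a stand-in class (`ObfuscatedGrammar` and `GrammarInspector` are hypothetical); whether it works unchanged on the real obfuscated class is an assumption that depends on how that class declares its rules, for example as public static fields.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Stand-in for an obfuscated grammar class; real class and field names will differ.
class ObfuscatedGrammar {
  public static final String ifStatement = "IF";
  public static final String performStatement = "PERFORM";
  public static final String displayStatement = "DISPLAY";
  private final int internalState = 0; // instance field, ignored by the inspector
}

class GrammarInspector {
  // Names of the public static fields declared by the class, sorted
  // because getDeclaredFields() does not guarantee any order.
  static List<String> publicStaticFieldNames(Class<?> clazz) {
    List<String> names = new ArrayList<>();
    for (Field field : clazz.getDeclaredFields()) {
      int modifiers = field.getModifiers();
      if (Modifier.isPublic(modifiers) && Modifier.isStatic(modifiers)) {
        names.add(field.getName());
      }
    }
    Collections.sort(names);
    return names;
  }
}
```

If the grammar is reachable through an instance rather than a class, calling `getClass()` on that instance gives the `Class<?>` to inspect.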

How to test a rule?

We can distinguish three kinds of tests:

- unit tests: we check our rule against a simple Cobol file (with one or more copybooks)

- integration tests: we check our rule against a real project WITHOUT launching SonarQube

- real tests: the rules are loaded into SonarQube and we check the results directly in the product.

Unit tests

Your test resources folder (src/test/resources/checks in this example) contains two locations:

- COPY: the folder where you store the copybooks required by your Cobol unit tests

- SRC: one Cobol program / file per unit test

The unit test skeleton should look like this:

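```java
import java.io.File;

import org.junit.Test;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import com.sonarsource.cobol.squid.CobolConfiguration;
// Also import CobolCheckVerifier and your check (IfWithoutEndCheck) from their packages.

public class IfWithoutEndCheckTest {

  private static final Logger LOGGER = LoggerFactory.getLogger(IfWithoutEndCheckTest.class);

  @Test
  public void testVisitNode() {
    final IfWithoutEndCheck check = new IfWithoutEndCheck();
    final CobolConfiguration cobolConfiguration = new CobolConfiguration();
    // (1) Select the Cobol dialect of the code under test
    cobolConfiguration.activateDialect(CobolConfiguration.DIALECT_MICRO_FOCUS_COBOL);
    // (2) Declare the file extensions used by copybooks
    cobolConfiguration.addCopyExtension("cpy");
    cobolConfiguration.addCopyExtension("COPY");
    // (3) Activate the preprocessors
    cobolConfiguration.setPreprocessorsActivated(true);
    // (4) Run the check on the annotated test program and compare with the annotations
    CobolCheckVerifier.verify(
        new File("src/test/resources/checks/SRC/IfWithoutEnd.cbl"),
        cobolConfiguration,
        check);
  }
}
```

The unit test reads the Cobol program IfWithoutEnd.cbl and looks for issues.

Each line where an issue is expected has to be annotated with *> Noncompliant.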

```cobol
IDENTIFICATION DIVISION.
PROCEDURE DIVISION.
    IF 1 = 1
        DISPLAY ""
        IF 1 = 1
            DISPLAY ""
        END-IF
        DISPLAY ""
    END-IF
    IF 1 = 1 *> Noncompliant
        DISPLAY ""
        IF 1 = 1 *> Noncompliant
            DISPLAY ""
            .
    IF 1 = 1 *> Noncompliant
        DISPLAY ""
        .
```
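To make the annotation convention concrete, here is a minimal sketch of how the expected issues can be derived from such a file: every line containing `*> Noncompliant` is an expected issue line. This is an assumption for illustration, not CobolCheckVerifier's actual implementation; `NoncompliantAnnotations` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

class NoncompliantAnnotations {
  static final String MARKER = "*> Noncompliant";

  // 1-based numbers of the source lines that carry the marker comment.
  static List<Integer> expectedIssueLines(List<String> sourceLines) {
    List<Integer> lines = new ArrayList<>();
    for (int i = 0; i < sourceLines.size(); i++) {
      if (sourceLines.get(i).contains(MARKER)) {
        lines.add(i + 1);
      }
    }
    return lines;
  }
}
```

Applied to the test program, it returns the line number of each of the three annotated IF statements. On a real file you could feed it `Files.readAllLines(Paths.get("src/test/resources/checks/SRC/IfWithoutEnd.cbl"))`.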

Writing integration tests

For those interested in testing a whole project without launching SonarQube, here is a snippet for such a test:

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

import org.apache.commons.io.FileUtils;
import org.junit.Ignore;
import org.junit.Test;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import com.sonarsource.cobol.squid.CobolConfiguration;
// Also import CobolCheckVerifier and your rule (Comp3SpaceRule) from their packages.

@Ignore("Enable for local analysis")
public class Comp3SpaceRuleIntTest {

  private static final Logger LOGGER = LoggerFactory.getLogger(Comp3SpaceRuleIntTest.class);

  @Test
  public void testVisitNode() {
    final Comp3SpaceRule check = new Comp3SpaceRule(); /*1*/
    final CobolConfiguration cobolConfiguration = new CobolConfiguration();
    cobolConfiguration.activateDialect(CobolConfiguration.DIALECT_MICRO_FOCUS_COBOL); /*2*/
    cobolConfiguration.addCopyExtension("cpy");
    cobolConfiguration.addCopyExtension("COPY");
    cobolConfiguration.setPreprocessorsActivated(true);

    final File projectFolder = new File("projectFolder");
    final File[] applications = projectFolder.listFiles();
    if (applications == null) {
      throw new IllegalStateException("Not a readable directory: " + projectFolder.getAbsolutePath());
    }
    for (final File folder : applications) { /*3*/
      if (!folder.isDirectory()) {
        continue;
      }
      final File copyBookFolder = new File(folder, "copyBook"); /*4*/
      final File pgmFolder = new File(folder, "pgm");
      if (copyBookFolder.exists()) {
        LOGGER.info("Add copybook folder {}", copyBookFolder);
        cobolConfiguration.addLibDirectory(copyBookFolder);
      }
      if (pgmFolder.exists()) {
        LOGGER.info("Add program folder {}", pgmFolder);
        cobolConfiguration.addLibDirectory(pgmFolder);
      }
    }

    /*5*/
    cobolConfiguration.addLibDirectory(new File("includeFolder"));

    final List<File> pgmFiles = new ArrayList<>(
        FileUtils.listFiles(projectFolder, new String[] { "cbl" }, true)); /*6*/

    try {
      CobolCheckVerifier.verify( /*7*/
          pgmFiles,
          cobolConfiguration,
          check);
    } catch (final Throwable t) {
      // The verifier also checks the "Noncompliant" annotations (see Caveats)
      t.printStackTrace();
    }
  }
}
```

Explanations:

- (1): Pick your rule

- (2): Pick your dialect

- (3), (4): Browse your Cobol source code repository and index the copybook folders

- (5): Manually add the missing copybook folders

- (6): Scan for all Cobol programs

- (7): Launch the analysis to detect all issues
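Steps (3), (4) and (6) can also be written with the JDK alone. The sketch below is an alternative to the commons-io call, assuming the layout used above (application folders with `copyBook` and `pgm` subfolders); `CobolSources` and its methods are hypothetical names.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class CobolSources {
  // All regular files under root whose extension is one of the given ones (case-insensitive).
  static List<Path> findPrograms(Path root, String... extensions) throws IOException {
    try (Stream<Path> paths = Files.walk(root)) {
      return paths.filter(Files::isRegularFile)
          .filter(p -> hasExtension(p, extensions))
          .sorted()
          .collect(Collectors.toList());
    }
  }

  // All directories under root with exactly the given name, e.g. "copyBook" or "pgm".
  static List<Path> findDirectories(Path root, String name) throws IOException {
    try (Stream<Path> paths = Files.walk(root)) {
      return paths.filter(Files::isDirectory)
          .filter(p -> p.getFileName() != null && p.getFileName().toString().equals(name))
          .sorted()
          .collect(Collectors.toList());
    }
  }

  private static boolean hasExtension(Path p, String[] extensions) {
    String fileName = p.getFileName().toString().toLowerCase(Locale.ROOT);
    for (String ext : extensions) {
      if (fileName.endsWith("." + ext.toLowerCase(Locale.ROOT))) {
        return true;
      }
    }
    return false;
  }
}
```

Each returned `Path` can be converted with `toFile()` before being passed to `addLibDirectory` or collected for the verifier. The extension match is deliberately case-insensitive, in case some sources use upper-case extensions such as `.CBL`.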

Caveats:

CobolCheckVerifier scans for issues and also checks the presence of the “Noncompliant” annotations (“NonCompliantIssues”). If you don't want this check to block your scan, you will have to duplicate (fork) this class and skip that behaviour.
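A sketch of the "fork" idea: the comparison between reported and annotated lines can either fail (unit-test behaviour) or just return the differences (project-scan behaviour). Everything here is hypothetical; it assumes you already have the reported and expected line numbers and does not reproduce CobolCheckVerifier's internals.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical outcome of comparing reported issue lines with annotated lines.
class VerificationResult {
  final List<Integer> unexpected = new ArrayList<>(); // reported but not annotated
  final List<Integer> missing = new ArrayList<>();    // annotated but not reported

  boolean ok() {
    return unexpected.isEmpty() && missing.isEmpty();
  }
}

class LenientVerifier {
  // strict = true behaves like a unit-test verifier: any mismatch throws.
  // strict = false only returns the mismatches, so a whole-project scan keeps going.
  static VerificationResult compare(List<Integer> reported, List<Integer> expected, boolean strict) {
    Set<Integer> reportedSet = new TreeSet<>(reported);
    Set<Integer> expectedSet = new TreeSet<>(expected);
    VerificationResult result = new VerificationResult();
    for (Integer line : reportedSet) {
      if (!expectedSet.contains(line)) {
        result.unexpected.add(line);
      }
    }
    for (Integer line : expectedSet) {
      if (!reportedSet.contains(line)) {
        result.missing.add(line);
      }
    }
    if (strict && !result.ok()) {
      throw new AssertionError("Unexpected issues at " + result.unexpected + ", missing issues at " + result.missing);
    }
    return result;
  }
}
```

On a real project with no annotations, lenient mode simply lists every reported line (sorted) in `unexpected`, which is the information you want from an integration scan.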

Bonus & Hints

How to obtain symbol and data type information

Your rule has to extend SymbolTableCheck and query the AST for specific nodes, such as QName or DataName nodes, to obtain the associated symbol table information.

If you pick the wrong node, the symbol table returns a meaningless value (the largest possible type) and your analysis may raise false positives.
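The following toy model illustrates the false-positive mechanism described above. It is not the SonarSource symbol table: the classes, the lookup by node text and the fallback values (`X(32767)`, `DISPLAY`) are assumptions chosen for illustration.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

// Hypothetical, simplified data item: name, PICTURE clause and USAGE.
class ToyDataItem {
  final String name;
  final String picture;
  final String usage;

  ToyDataItem(String name, String picture, String usage) {
    this.name = name;
    this.picture = picture;
    this.usage = usage;
  }
}

class ToySymbolTable {
  // Fallback for anything that is not a declared data name (illustrative "largest" type).
  static final ToyDataItem UNKNOWN = new ToyDataItem("<unknown>", "X(32767)", "DISPLAY");

  private final Map<String, ToyDataItem> items = new HashMap<>();

  void declare(ToyDataItem item) {
    items.put(item.name.toUpperCase(Locale.ROOT), item);
  }

  // Only the text of a data-name node matches a declared key; anything else gets the fallback.
  ToyDataItem resolve(String nodeText) {
    return items.getOrDefault(nodeText.toUpperCase(Locale.ROOT), UNKNOWN);
  }
}

class PackedUsageRule {
  // True if the node resolves to a packed-decimal (COMP-3) item.
  static boolean isPacked(ToySymbolTable table, String nodeText) {
    return "COMP-3".equals(table.resolve(nodeText).usage);
  }
}
```

Passing the whole statement (`MOVE 0 TO WS-AMOUNT`) instead of its data-name node (`WS-AMOUNT`) silently yields the fallback, so a rule that reports non-packed targets would fire on a field that is actually packed.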

I wrote a few utility functions to work around the difficulties of using the API:

Get the node holding the object's name, or fail trying:

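```java
// A: the obfuscated grammar field described earlier (dataName, qualifiedDataName, ...)
AstNode getChildName(final AstNode child) {
  // The node itself already holds the name
  if (child.is(A.dataName) || child.is(A.qualifiedDataName)) {
    return child;
  }
  // Skip wrapper nodes that have a single child
  if (child.getNumberOfChildren() == 1) {
    return getChildName(child.getChild(0));
  }
  // Several children: follow the first one if it is the qualified name
  if (child.hasChildren() && child.getChild(0).is(A.qualifiedDataName)) {
    return getChildName(child.getChild(0));
  }
  throw new UnsupportedOperationException("Children " + child.getChildren());
}
```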

Obtain the DataItem (symbol, type, etc.) of a variable:

```java
// Resolves the symbol table entry (DataItem) of a data-name node.
// com.sonarsource.cobol.B.A and its methods are obfuscated SonarSource names; A is the grammar field.
DataItem getDataItem(final AstNode node) {
  if (node == null) {
    return null;
  }
  final com.sonarsource.cobol.B.A dataNode = com.sonarsource.cobol.B.A.A(node, A);
  LOGGER.debug("NodeWithItem {}->{}", node, node.getClass());
  LOGGER.debug("DataItem>Symbol Qualified name {}", dataNode.B());
  final DataItem dataItem = getNewSymbolTable().A(dataNode);
  // printDataItem(dataItem);
  return dataItem;
}
```

Dump the AST

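```java
// Recursively logs each node with its class and tokens, indented by depth.
// Strings is Guava's com.google.common.base.Strings.
void outputTree(final AstNode node, final int depth) {
  LOGGER.info("{} Node {}, clazz={}, tokens {}", Strings.repeat(" ", depth), node, node.getClass().getName(), node.getTokens());
  for (final AstNode child : node.getChildren()) {
    outputTree(child, depth + 1);
  }
}
```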

Output the DataItem information for debugging purposes:

```java
void printDataItem(final DataItem dataItem) {
  LOGGER.info("DataItem> {} : picType {} picture {} dataName {} usage {} value {}-> parent {}",
      dataItem,
      dataItem.getPictureType(),
      dataItem.getPicture(),
      dataItem.getDataName(),
      dataItem.getUsage(),
      dataItem.getValue(),
      dataItem.getParent() == null ? "" : dataItem.getParent().getDataName());
}
```